In the November 1, 2023, issue of the Washington Post, Nitasha Tiku, Kevin Schaul, and Szu Yu Chen published an article titled "This is How Image Generators See the World." Its subtitle: "These fake images reveal how AI amplifies our worst stereotypes."

They wrote:

“Artificial intelligence image tools have a tendency to spin up disturbing clichés: Asian women are hypersexual. Africans are primitive. Europeans are worldly. Leaders are men. Prisoners are Black.

These stereotypes don’t reflect the real world; they stem from the data that trains the technology. Grabbed from the internet, these troves can be toxic — rife with pornography, misogyny, violence and bigotry.”

As someone who has studied and presented on how rater bias affects the fairness of standardized patients' assessments of medical students during simulated clinical encounters, I wonder how AI reflects our collective biases.

Although my experience with AI-generated images so far has not been as alarming as the Post writers suggest, these images can still reveal biases rooted in the datasets used to train AI. AI learns from us by aggregating data from many sources. It is like Google on steroids.

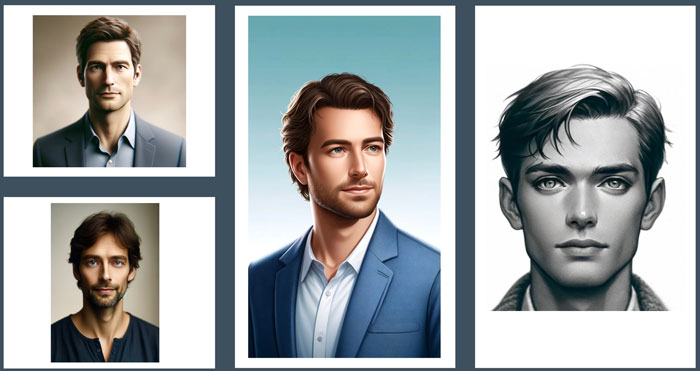

Here’s an experiment. I asked Open AI’s Image Generator to create images of “generic males.” This is what it created:

I then asked ChatGPT to tell me how those images represent our implicit biases. Here is the response:

In the provided images of AI-generated males, several types of implicit biases might be revealed:

Conventional Attractiveness: The men depicted in these images adhere to certain beauty standards, which tend to favor symmetrical features, strong jawlines, and full hair. These characteristics are often associated with societal ideals of male attractiveness.

Age Bias: All of the individuals appear to be young or middle-aged, which may indicate a bias towards representing men within a certain age bracket, potentially marginalizing older age groups.

Ethnic Diversity: Depending on the dataset the AI was trained on, there may be a lack of diversity in skin tone, ethnic features, and hair types. The men in these images seem to represent a narrow range of these characteristics.

Socioeconomic Assumptions: The men are presented in clean, well-groomed appearances with what appears to be business-casual attire. This could suggest an implicit bias towards certain socioeconomic classes or professions.

Cultural Representation: The attire and hairstyles may reflect a westernized view of men's fashion, indicating a potential bias towards Western cultural standards.

Emotional Expression: The expressions of the AI-generated men tend to be neutral or slightly positive, which could reflect biases in emotional representation for men, where they are often portrayed as stoic or unemotional.

ChatGPT provided this disclaimer: It's important to note that these observations are speculative, as they are based on a small sample of images and AI's output is a reflection of the data it was trained on. To mitigate such biases, it's crucial for AI developers to use diverse and inclusive datasets.

I then asked the image generator to create images of "generic African-American females."

Next, I asked ChatGPT: What implicit biases might these images reveal?

ChatGPT responded: In the provided AI-generated images of women who appear to be of African descent, some implicit biases that might be revealed include:

Beauty Standards: The women depicted in these images are all portrayed with smooth skin, symmetrical features, and make-up, which aligns with conventional and possibly narrow definitions of beauty. This may suggest a bias towards these standards while neglecting a broader spectrum of natural appearances.

Hair Texture and Style: The women have different hairstyles that range from curly to straight. This variety can be seen as a positive reflection of diversity; however, if straight hair is predominantly featured in AI-generated images over natural hairstyles, it could reveal a bias towards Eurocentric beauty standards.

Age Representation: Similar to the male images, all the individuals appear to be in the same age range, suggesting a potential bias that favors the representation of youth.

Skin Tone: The range of skin tones present in the images does show some diversity; however, biases can often be seen in AI-generated images if there is a lack of representation across the full spectrum of skin tones found in the African-American community and other communities of color.

Cultural Diversity: There may be subtle indications of socioeconomic status, such as clothing and jewelry, which can reflect assumptions about class and social status.

Facial Expression: The facial expressions in these images are friendly and approachable, which can be positive. However, if AI-generated images predominantly feature certain expressions, it might unintentionally perpetuate stereotypes about demeanor and personality.

The disclaimer: It's important to note that the presence of bias in AI-generated images does not necessarily indicate malicious intent but rather reflects the data the AI was trained on. As AI and machine learning technologies continue to evolve, there is a growing effort to ensure that the datasets used for training are diverse and representative of different demographics to reduce the perpetuation of stereotypes and biases.

______________

AI-generated art can reveal implicit biases, largely because the datasets used to train these systems contain the biases present in human culture. In that sense, AI-generated art has the potential to reflect back to us who we are and how we construct our world.

Continue the discussion on LinkedIn: What biases do you believe are reflected in the images?